This is my mother-in-law's Christmas Cookie Cookbook.

Greg Fairbrother

Tuesday, March 3, 2026

Monday, September 22, 2025

Saturday, August 26, 2023

Catholic Bible Word Search

Available now on Amazon is my Catholic Bible Large Print Word Search, which features:

- 84 Word Searches

- Many Puzzle Shapes

- Answers

- 18pt font size

- Large Print

Wednesday, March 8, 2023

Read Catholic Bible in a Year

- Reading from the Old Testament

- Reading from one of these: Psalms, Job, Proverbs, Ecclesiastes, Song, Wisdom, Sirach

- Reading from the New Testament

Please note you will need a copy of the Catholic Bible as the text is not included with this journal. Here are some options for the Bible text:

- My iOS app “Catholic New American Bible RE” offers the text along with the same Read the Bible in a Year plan.

- My New American Bible Revised Edition eBook is available on:

- My "Bible for Catholics" iOS app -- This is the Catholic Public Domain Version (CPDV) of the Holy Bible. It is not the New American Bible (NAB), so some parts will be slightly different from the NAB.

Wednesday, November 16, 2022

My Catholic Bible Apps

These are my Bible apps and ebooks:

- New American Bible Revised Edition (NABRE) Bible -- This translation has been approved by the United States Conference of Catholic Bishops (USCCB) and is the current version of the Catholic Bible. It is available for these devices:

- Bible for Catholics -- This is the Catholic Public Domain Version (CPDV) of the Holy Bible. It is not the New American Bible (NAB), so some parts will be slightly different from the NAB.

- All-In-1 -- This iPhone app includes: NABRE Bible, Catholic Prayers, Liturgical Calendar, Local Catholic Places, Order of the Mass, ...

Thursday, August 18, 2022

Database Links using Autonomous Database with private endpoints across regions

I am using Autonomous Database 19c on Shared Infrastructure (ADB-S), with the source in Phoenix and the target in London.

Prereqs include:

- Two ADB-S instances with private endpoints, one in each region

- Region peering configured

- DNS configured. I like to create a compute VM in each region to verify that region peering and DNS are working

- Upload the target database wallet to object storage

- Create a directory in the source database to hold the wallet

- Create a credential in the source database to access object storage

- Copy the wallet from object storage to the source database

- Create another credential in the source database to access the target database

- Create the database link
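Before running the SQL below, the region peering and DNS prerequisite can be checked from the compute VM in the source region. This is a minimal bash sketch, assuming `getent`, `timeout`, and bash's `/dev/tcp` redirection are available on the VM (as on Oracle Linux). `check_endpoint` is a hypothetical helper name, and the hostname in the example is the same masked placeholder used in the database link.

```bash
#!/usr/bin/env bash
# check_endpoint HOST PORT  (hypothetical helper)
# 1) HOST must resolve through DNS; a private endpoint hostname should
#    resolve to a private IP when peering and DNS are set up correctly.
# 2) A TCP connection to HOST:PORT must succeed within 5 seconds.
check_endpoint() {
  local host=$1 port=$2 addr
  # First address returned by the system resolver, empty if none.
  addr=$(getent hosts "$host" | awk '{print $1; exit}')
  if [[ -z $addr ]]; then
    echo "DNS: $host does not resolve" >&2
    return 1
  fi
  echo "DNS: $host -> $addr"
  # Opening /dev/tcp/HOST/PORT makes bash attempt a TCP connection.
  if timeout 5 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
    echo "TCP: $host:$port reachable"
  else
    echo "TCP: $host:$port not reachable" >&2
    return 1
  fi
}

# Example (replace with the target database's private endpoint hostname):
# check_endpoint '*********.adb.uk-london-1.oraclecloud.com' 1522
```

Running the same check from the VM in the other region confirms that peering works in both directions.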

create directory wallet_dir_london as 'WALLETDIR_LONDON';

begin

DBMS_CLOUD.create_credential (

credential_name => 'OBJ_STORE_CREDA',

username => 'YOUROCIUSERNAME',

password => 'YOURTOKEN'

);

end;

/

begin DBMS_CLOUD.GET_OBJECT(

credential_name => 'OBJ_STORE_CREDA',

object_uri => 'https://objectstorage.us-phoenix-1.oraclecloud.com/n/YOURTENANCY/b/gfswinglondon/o/cwallet.sso',

directory_name => 'WALLET_DIR_LONDON');

END;

/

SELECT * FROM DBMS_CLOUD.LIST_FILES('WALLET_DIR_LONDON');

BEGIN

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'DB_LINK_CRED',

username => 'ADMIN',

password => 'YOURADBPASSWORD');

END;

/

SELECT owner, credential_name FROM dba_credentials;

BEGIN

DBMS_CLOUD_ADMIN.CREATE_DATABASE_LINK(

db_link_name => 'LONDONDBLINK',

hostname => '*********.adb.uk-london-1.oraclecloud.com', -- your hostname here

port => '1522',

service_name => '**************_gfswinglon_high.adb.oraclecloud.com', -- your service name here

ssl_server_cert_dn => 'CN=adwc.eucom-central-1.oraclecloud.com, OU=Oracle BMCS FRANKFURT, O=Oracle Corporation, L=Redwood City, ST=California, C=US',

credential_name => 'DB_LINK_CRED',

directory_name => 'WALLET_DIR_LONDON',

private_target => TRUE);

END;

/

select ora_database_name@londondblink from dual;

Thursday, August 11, 2022

Using ADB wallet to hide your sqlplus and sqlldr password

Using the following:

- Autonomous Database (ADB) 19c

- Oracle Database client 21c on an OCI VM running Oracle Linux (OL) 8

- Download the ADB wallet from the OCI console and unzip it to an empty directory

- Modify the sqlnet.ora file

  - Add the line "SQLNET.WALLET_OVERRIDE=TRUE"

  - Modify the WALLET_LOCATION line: change DIRECTORY="?/network/admin" to point to your new unzip directory, e.g. DIRECTORY="/home/oracle/Wallet_gfships"

- Create a credential in the wallet for your database user

  - mkstore -wrl /home/oracle/Wallet_gfships -createCredential gfships_high admin youradminpassword (or use your own database user account). This command asks for the wallet password, which is the same password you used to download the wallet from the OCI console.

- set TNS_ADMIN to this wallet directory

- verify connectivity with tnsping
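The two sqlnet.ora edits above can be scripted. This is a minimal bash sketch, assuming the sqlnet.ora from the wallet zip still contains the default DIRECTORY="?/network/admin" entry and that GNU sed is available (as on OL 8). `configure_sqlnet` is a hypothetical helper name; /home/oracle/Wallet_gfships is the example directory from this post.

```bash
#!/usr/bin/env bash
# configure_sqlnet WALLET_DIR  (hypothetical helper)
# Points WALLET_LOCATION at WALLET_DIR and enables SQLNET.WALLET_OVERRIDE
# in WALLET_DIR/sqlnet.ora. Running it twice leaves the file unchanged.
configure_sqlnet() {
  local dir=$1 ora="$1/sqlnet.ora"
  [[ -f $ora ]] || { echo "missing $ora" >&2; return 1; }
  # Replace the default ?/network/admin location with the unzip directory.
  sed -i "s|DIRECTORY=\"?/network/admin\"|DIRECTORY=\"$dir\"|" "$ora"
  # Append the override line only if it is not already present.
  grep -q '^SQLNET.WALLET_OVERRIDE' "$ora" || echo 'SQLNET.WALLET_OVERRIDE=TRUE' >> "$ora"
}
```

For example, after `export TNS_ADMIN=/home/oracle/Wallet_gfships`, running `configure_sqlnet "$TNS_ADMIN"` applies both edits. Once the mkstore credential exists, `sqlplus /@gfships_high` (or `sqlldr userid=/@gfships_high control=load.ctl`, where load.ctl is a placeholder control file) should connect using the stored credential, so the password no longer appears on the command line.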

Thursday, June 2, 2022

Scaling OCPUs in Exadata Cloud

There are three main ways to scale the number of OCPUs in Exadata Cloud Service or Exadata Cloud at Customer:

- OCI Console

- REST API

- OCI Command Line Interface (OCI CLI)

The sections below cover four scripted options:

- "Auto Scaling" script

- curl

- OCI CLI

- dbaascli

1. "Auto Scaling" Script

This script can be downloaded from My Oracle Support: "Scale-up and Scale-down automation utility for OCI DB System (ExaCS/ExaCC)" (Doc ID 2719916.1).

The Oracle DynamicScaling utility can run as a standalone executable or as a daemon on one or more ExaCS compute nodes or ExaCC VM cluster nodes. By default, DynamicScaling monitors the CPUs with very limited host impact. If the load exceeds the maximum CPU threshold ("--maxthreshold") for an interval of time ("--interval"), it automatically scales up the OCPUs by a factor ("--ocpu") until it reaches the maximum limit ("--maxocpu"). If the load falls below the minimum CPU threshold ("--minthreshold") for an interval of time ("--interval"), it scales down until it reaches the minimum limit ("--minocpu"). If a valid cluster file system (ACFS) is provided, DynamicScaling considers the load of all nodes where it is running and scales up or down based on the cluster node load (average or maximum). The support note includes several examples.
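The threshold logic described above can be illustrated with a small bash sketch. This is not the DynamicScaling utility itself; `next_ocpu` is a hypothetical function, the threshold values are made-up examples that only mirror the utility's options, and "--ocpu" is treated here as a fixed number of OCPUs added or removed per step, which is an assumption.

```bash
#!/usr/bin/env bash
# Hypothetical values mirroring the DynamicScaling options.
MAX_THRESHOLD=70   # like --maxthreshold (CPU %)
MIN_THRESHOLD=40   # like --minthreshold (CPU %)
OCPU_STEP=2        # like --ocpu, assumed to be an additive step
MAX_OCPU=24        # like --maxocpu
MIN_OCPU=4         # like --minocpu

# next_ocpu AVG_LOAD CURRENT_OCPU
# AVG_LOAD is the average CPU % (integer) over one --interval window.
# Prints the OCPU count the cluster should have after this interval.
next_ocpu() {
  local load=$1 current=$2 target=$2
  if (( load > MAX_THRESHOLD )); then
    # Scale up by one step, but never above the maximum.
    target=$(( current + OCPU_STEP ))
    (( target > MAX_OCPU )) && target=$MAX_OCPU
  elif (( load < MIN_THRESHOLD )); then
    # Scale down by one step, but never below the minimum.
    target=$(( current - OCPU_STEP ))
    (( target < MIN_OCPU )) && target=$MIN_OCPU
  fi
  echo "$target"
}
```

A daemon built this way would call `next_ocpu` once per interval and only call the scaling API when the result differs from the current count; loads between the two thresholds leave the cluster unchanged.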

2. Curl bash script

Here is the request body from a curl script example for an HTTP PUT. The request changes the number of OCPUs (cores) at the VM cluster level.

{ "cpuCoreCount" : 6 }
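Plain curl calls to the OCI REST API must carry OCI HTTP-signature headers. As a swapped-in alternative, the OCI CLI's `oci raw-request` command signs the request for you. The sketch below sends the same PUT body to the Database service's cloudVmClusters endpoint for an ExaCS VM cluster; the region, the OCID, and the helper names `cpu_body`, `cluster_uri`, and `scale_cluster` are placeholders or hypothetical. An ExaCC VM cluster uses the `vmClusters` path instead.

```bash
#!/usr/bin/env bash
# cpu_body CORES -> JSON body with the new core count.
cpu_body() {
  local cores=$1
  # Reject anything that is not a positive integer.
  [[ $cores =~ ^[1-9][0-9]*$ ]] || { echo "invalid core count: $cores" >&2; return 1; }
  printf '{"cpuCoreCount": %d}\n' "$cores"
}

# cluster_uri REGION OCID -> REST endpoint of an ExaCS cloud VM cluster.
cluster_uri() {
  printf 'https://database.%s.oraclecloud.com/20160918/cloudVmClusters/%s\n' "$1" "$2"
}

# scale_cluster REGION OCID CORES -> issues the signed HTTP PUT.
scale_cluster() {
  local body
  body=$(cpu_body "$3") || return 1
  oci raw-request --http-method PUT \
    --target-uri "$(cluster_uri "$1" "$2")" \
    --request-body "$body"
}
```

Usage, with a placeholder OCID: `scale_cluster us-phoenix-1 ocid1.cloudvmcluster.oc1.phx.example 6`.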

3. OCI Command Line Interface (OCI CLI)

- Install the OCI CLI, or use Cloud Shell, which has the CLI already installed

- Set up an instance principal. Instead of an instance principal, you may need to use a user ID and token

- Create the script; see the OCI CLI command reference for cloud-vm-cluster update
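A scaling script built on these steps might look like the following minimal bash sketch. It assumes the VM belongs to a dynamic group with a policy that allows it to manage the cloud VM cluster; `set_ocpu`, `CLUSTER_OCID`, and the `DRY_RUN` switch are hypothetical names, and the default OCID is a placeholder.

```bash
#!/usr/bin/env bash
# Placeholder OCID; export CLUSTER_OCID with your cloud VM cluster's OCID.
CLUSTER_OCID=${CLUSTER_OCID:-ocid1.cloudvmcluster.oc1..example}

# set_ocpu CORES: request a new OCPU count for the cluster using
# instance principal authentication.
# With DRY_RUN=1 the command is printed instead of executed.
set_ocpu() {
  local cores=$1
  local cmd=(oci db cloud-vm-cluster update
             --cloud-vm-cluster-id "$CLUSTER_OCID"
             --cpu-core-count "$cores"
             --auth instance_principal)
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Running `DRY_RUN=1 set_ocpu 6` first shows exactly what will be sent; dropping `DRY_RUN` performs the update. If you use a user ID and key instead of an instance principal, remove `--auth instance_principal` so the CLI falls back to its config file.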

4. dbaascli

Tuesday, May 31, 2022

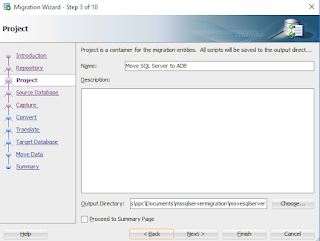

Migrating SQL Server to Oracle Cloud database offline

- SQL Server 2016 running on a Windows server in OCI as the source in online mode

- Autonomous Database on Shared infrastructure as the target in offline mode

- SQL Developer 21.4.3 running on the same server as SQL Server

- SQL Developer documentation

- create connection to the source (SQL Server 2016)

- Set up the migration repository; I used a different schema within the target Autonomous Database

- capture source information online

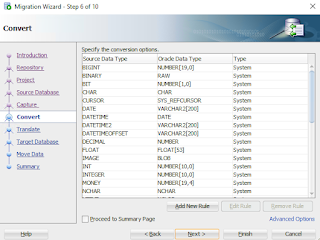

- review convert/translate options for meta info, procedures, triggers, views, ...

- select offline mode for DDL and data

- run the job

- after the job completes, run the DDL, extract the data from the source, and load it into the target

- Step 1: Overview of migration steps

- Step 2: Select migration repository

- Step 4: Select source in online mode

- Step 5: Select database(s)

- Step 6: Review data type convert options

- Step 7: Select objects to migrate, default is all

- Step 8: Select offline to move DDL

- Step 9: Select data to be moved offline

- Step 10: Summary and start job to migrate

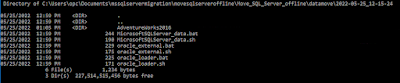

After the job completes successfully, run the scripts to create the DDL and copy the data to the target database.

- In the project directory you will find the DDL and data scripts.

- Run the master.sql script on the target, which creates the DDL.

- These are the scripts to extract and load the data. The command parameters are the IP address of the source database and the user ID and password to connect to the source. This command exports the data from the source database to files.

Migrating SQL Server to Oracle Cloud database online

- SQL Server 2016 running on a Windows server in OCI as the source

- Autonomous Database on Shared infrastructure as the target

- SQL Developer 21.4.3 running on the same server as SQL Server

- SQL Developer documentation

- create connections to the source (SQL Server 2016) and target (Autonomous Database)

- Set up the migration repository; I used a different schema within the target Autonomous Database

- capture source information

- review convert/translate options for meta info, procedures, triggers, views, ...

- create target

- submit the job to create the DDL and move the data

- Step 1: Info on steps

- Step 2: Select repository

- Step 5: Select database(s)

- Step 6: Convert data types

- Step 7: Select objects to be converted

- Step 8: Select target

- Step 9: Select move data online

- Step 10: Start job to migrate